4.1: Introduction and Network Service Models

We saw in the previous chapter that the transport layer provides communication service between two processes running on two different hosts. In order to provide this service, the transport layer relies on the services of the network layer, which provides a communication service between hosts. In particular, the network layer moves transport-layer segments from one host to another. At the sending host, the transport-layer segment is passed to the network layer. It is then the job of the network layer to get the segment to the destination host and pass the segment up the protocol stack to the transport layer. Exactly how the network layer moves a segment from the transport layer of an origin host to the transport layer of the destination host is the subject of this chapter. We will see that, unlike the transport layer, which runs only in the end systems, the network layer involves each and every host and router in the network. Because of this, network-layer protocols are among the most challenging (and therefore interesting!) in the protocol stack.

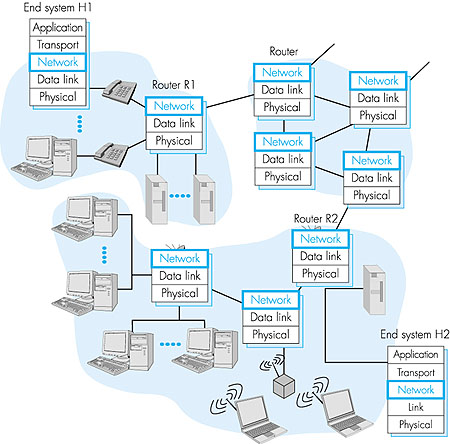

Figure 4.1 shows a simple network with two hosts (H1 and H2) and several routers on the path between H1 and H2. The role of the network layer in a sending host is to begin the packet on its journey to the receiving host. For example, if H1 is sending to H2, the network layer in host H1 transfers its packets to its nearby router R2. At the receiving host (for example, H2), the network layer receives the packet from its nearby router (in this case, R2) and delivers the packet up to the transport layer at H2. The primary role of the routers is to "switch" packets from input links to output links. Note that the routers in Figure 4.1 are shown with a truncated protocol stack, that is, with no upper layers above the network layer, because (except for control purposes) routers do not run transport- and application-layer protocols such as those we examined in Chapters 2 and 3.

The role of the network layer is thus deceptively simple--to transport packets from a sending host to a receiving host. To do so, three important network-layer functions can be identified:

4.1.1: Network Service Model

When the transport layer at a sending host transmits a packet into the network (that is, passes it down to the network layer at the sending host), can the transport layer count on the network layer to deliver the packet to the destination? When multiple packets are sent, will they be delivered to the transport layer in the receiving host in the order in which they were sent? Will the amount of time between the sending of two sequential packet transmissions be the same as the amount of time between their reception? Will the network provide any feedback about congestion in the network? What is the abstract view (properties) of the channel connecting the transport layer in the sending and receiving hosts? The answers to these questions and others are determined by the service model provided by the network layer. The network-service model defines the characteristics of end-to-end transport of data between one "edge" of the network and the other, that is, between sending and receiving end systems.

Datagram or Virtual Circuit?

Perhaps the most important abstraction provided by the network layer to the upper layers is whether or not the network layer uses virtual circuits (VCs). You may recall from Chapter 1 that a virtual-circuit packet network behaves much like a telephone network, which uses "real circuits" as opposed to "virtual circuits." There are three identifiable phases in a virtual circuit:

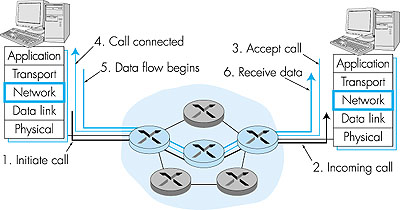

The messages that the end systems send to the network to indicate the initiation or termination of a VC, and the messages passed between the switches to set up the VC (that is, to modify switch tables), are known as signaling messages, and the protocols used to exchange these messages are often referred to as signaling protocols. VC setup is shown pictorially in Figure 4.2. ATM, frame relay, and X.25, which will be covered in Chapter 5, are three networking technologies that use virtual circuits.
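The role of signaling in modifying switch tables can be illustrated with a small Python sketch. It is a toy model, not the signaling protocol of ATM, frame relay, or X.25: it assumes, purely for illustration, that each switch table maps an (incoming link, incoming VC number) pair to an (outgoing link, outgoing VC number) pair, and the switch names, link names, VC numbers, and the helpers `setup_vc`, `send_packet`, and `teardown_vc` are all hypothetical. Setup installs per-VC state in every switch on the path, data transfer uses only table lookups, and teardown removes that state again.

```python
# A toy model of virtual-circuit (VC) state in packet switches.
# Hypothetical assumption: each switch table maps
#   (incoming link, incoming VC number) -> (outgoing link, outgoing VC number),
# and signaling installs or removes one such entry per switch on the path.
from dataclasses import dataclass, field


@dataclass
class Switch:
    name: str
    # Per-VC state kept inside the switch.
    table: dict = field(default_factory=dict)

    def forward(self, in_link, in_vc):
        # Data transfer: look up the VC number, not a destination address.
        return self.table[(in_link, in_vc)]


def setup_vc(path, links, vc_numbers):
    """VC setup (signaling): install an entry in every switch on the path.

    links[i] enters path[i] and links[i + 1] leaves it; vc_numbers[i] is the
    VC number used on links[i]. Both lists have len(path) + 1 entries.
    """
    for i, sw in enumerate(path):
        sw.table[(links[i], vc_numbers[i])] = (links[i + 1], vc_numbers[i + 1])


def teardown_vc(path, links, vc_numbers):
    """VC teardown (signaling): remove the per-VC entries again."""
    for i, sw in enumerate(path):
        del sw.table[(links[i], vc_numbers[i])]


def send_packet(path, first_link, first_vc):
    """Carry one packet hop by hop; return the (link, VC number) it leaves on."""
    link, vc = first_link, first_vc
    for sw in path:
        link, vc = sw.forward(link, vc)
    return link, vc


if __name__ == "__main__":
    s1, s2 = Switch("S1"), Switch("S2")
    path, links, vcs = [s1, s2], ["A-S1", "S1-S2", "S2-B"], [12, 22, 32]
    setup_vc(path, links, vcs)
    print(send_packet(path, "A-S1", 12))  # ('S2-B', 32)
    teardown_vc(path, links, vcs)
    print(s1.table, s2.table)  # {} {}
```

After teardown, a packet sent with the old VC number raises a `KeyError` in this sketch, mirroring the fact that the switches no longer hold any state for that VC.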

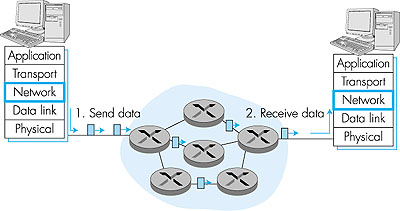

With a datagram network layer, each time an end system wants to send a packet, it stamps the packet with the address of the destination end system, and then pops the packet into the network. As shown in Figure 4.3, this is done without any VC setup. Packet switches in a datagram network (called "routers" in the Internet) do not maintain any state information about VCs because there are no VCs! Instead, packet switches route a packet toward its destination by examining the packet's destination address, indexing a routing table with the destination address, and forwarding the packet in the direction of the destination. (As discussed in Chapter 1, datagram routing is similar to routing ordinary postal mail.) Because routing tables can be modified at any time, a series of packets sent from one end system to another may follow different paths through the network and may arrive out of order. The Internet uses a datagram network layer. [Paxson 1997] presents an interesting measurement study of packet reordering and other phenomena in the public Internet.
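The datagram forwarding and reordering described above can be made concrete with a toy Python sketch. The hosts, routers, link delays, and the helpers `route` and `path_delay` are hypothetical, and the exact-match dictionary lookup is a simplification (real Internet routers match destination addresses against address prefixes). The routers keep no per-connection state; the routing table at R1 simply changes between two transmissions, so the second packet takes a shorter path and overtakes the first.

```python
# Toy datagram forwarding: routers keep no VC state, only a table indexed
# by destination address. All names and delays here are hypothetical.

# Each router's routing table: destination -> next hop.
routing_tables = {
    "R1": {"H2": "R2"},
    "R2": {"H2": "R3"},
    "R3": {"H2": "H2"},
    "R4": {"H2": "R3"},
}

# One-way delay (ms) of each link, keyed by (from, to).
link_delay = {
    ("H1", "R1"): 1, ("R1", "R2"): 5, ("R2", "R3"): 5,
    ("R1", "R4"): 1, ("R4", "R3"): 1, ("R3", "H2"): 1,
}


def route(first_router, dest):
    """Forward hop by hop by indexing each router's table with the destination.

    Assumes the tables are loop-free and every router has an entry for dest.
    """
    path, node = [first_router], first_router
    while node != dest:
        node = routing_tables[node][dest]
        path.append(node)
    return path


def path_delay(path):
    """Sum of the link delays along a path."""
    return sum(link_delay[(a, b)] for a, b in zip(path, path[1:]))


if __name__ == "__main__":
    arrivals = []
    # Packet 1 is sent at t=0 over the current route R1 -> R2 -> R3.
    p1 = ["H1"] + route("R1", "H2")
    arrivals.append((0 + path_delay(p1), 1))
    # The routing table at R1 changes between the two transmissions.
    routing_tables["R1"]["H2"] = "R4"
    # Packet 2 is sent at t=2 and follows the new, faster path via R4.
    p2 = ["H1"] + route("R1", "H2")
    arrivals.append((2 + path_delay(p2), 2))
    print(p1, p2)
    print([seq for _, seq in sorted(arrivals)])  # [2, 1]: out of order
```

Packet 1 arrives at t=12 and packet 2 at t=6, so the receiver sees them in the order 2, 1 even though nothing was lost.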

You may recall from Chapter 1 that a packet-switched network typically offers either a VC service or a datagram service to the transport layer, but not both services. For example, we'll see in Chapter 5 that an ATM network offers only a VC service to the transport layer. The Internet offers only a datagram service to the transport layer. An alternate terminology for VC service and datagram service is network-layer connection-oriented service and network-layer connectionless service, respectively. Indeed, VC service is a sort of connection-oriented service, as it involves setting up and tearing down a connection-like entity, and maintaining connection-state information in the packet switches. Datagram service is a sort of connectionless service in that it does not employ connection-like entities. Both sets of terminology have advantages and disadvantages, and both sets are commonly used in the networking literature. In this book we decided to use the "VC service" and "datagram service" terminology for the network layer, and reserve the "connection-oriented service" and "connectionless service" terminology for the transport layer. We believe this distinction will be useful in helping the reader delineate the services offered by the two layers.

The key aspects of the service model of the Internet and ATM network architectures are summarized in Table 4.1. We do not want to delve deeply into the details of the service models here (they can be quite "dry", and detailed discussions can be found in the standards themselves [ATM Forum 1997]). A comparison between the Internet and ATM service models is, however, quite instructive.

Table 4.1: Internet and ATM Network Service Models

The current Internet architecture provides only one service model, the datagram service, which is also known as "best-effort service." From Table 4.1, it might appear that best-effort service is a euphemism for "no service at all." With best-effort service, timing between packets is not guaranteed to be preserved, packets are not guaranteed to be received in the order in which they were sent, nor is the eventual delivery of transmitted packets guaranteed. Given this definition, a network that delivered no packets to the destination would satisfy the definition of best-effort delivery service. (Indeed, today's congested public Internet might sometimes appear to be an example of a network that does so!) As we will discuss shortly, however, there are sound reasons for such a minimalist network service model. The Internet's best-effort-only service model is currently being extended to include so-called integrated services and differentiated services. We will cover these still-evolving service models in Chapter 6.

Let us next turn to the ATM service models. We'll focus here on the service model standards being developed in the ATM Forum [ATM Forum 1997]. The ATM architecture provides for multiple service models; that is, within the same network, different connections can be provided with different classes of service.

Constant bit rate (CBR) network service was the first ATM service model to be standardized, probably reflecting the fact that telephone companies were the early prime movers behind ATM, and CBR network service is ideally suited for carrying real-time, constant-bit-rate audio (for example, a digitized telephone call) and video traffic. The goal of CBR service is conceptually simple--to make the network connection look like a dedicated copper or fiber connection between the sender and receiver.
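The contrast between best-effort service and the goal of CBR service can be sketched in Python. In this hypothetical model, `dedicated_line` stands in for an idealized dedicated connection (every packet sees the same fixed delay), while `best_effort` drops packets silently and adds random extra delay. The function names, the loss probability, the delays, and the seeded random generator are made-up illustration parameters, not measurements of any real network.

```python
# Toy comparison of a best-effort channel with an idealized dedicated line.
# All parameters are hypothetical and chosen only to illustrate the models.
import random


def dedicated_line(n, interval, delay):
    """Idealized goal of CBR: fixed delay, so spacing and order are preserved.

    Returns (arrival_time, packet_number) pairs in arrival order.
    """
    return [(i * interval + delay, i) for i in range(n)]


def best_effort(n, interval, base_delay, loss_prob, max_jitter, seed=0):
    """No delivery, ordering, or timing guarantee; drops are silent."""
    rng = random.Random(seed)
    arrivals = []
    for i in range(n):
        if rng.random() < loss_prob:
            continue  # lost, and the sender gets no feedback
        arrival = i * interval + base_delay + rng.uniform(0, max_jitter)
        arrivals.append((arrival, i))
    return sorted(arrivals)  # what the receiver observes, in arrival order


def gaps(arrivals):
    """Time between consecutive arrivals (rounded for readability)."""
    times = [t for t, _ in arrivals]
    return [round(b - a, 6) for a, b in zip(times, times[1:])]


if __name__ == "__main__":
    print(gaps(dedicated_line(5, interval=2.0, delay=7.0)))  # [2.0, 2.0, 2.0, 2.0]
    be = best_effort(10, interval=2.0, base_delay=7.0,
                     loss_prob=0.2, max_jitter=5.0)
    # Spacing and order may differ from the sending pattern; some packets may be missing.
    print(gaps(be), [i for _, i in be])
    # A channel that drops everything still meets the best-effort definition.
    print(best_effort(10, 2.0, 7.0, loss_prob=1.0, max_jitter=5.0))  # []
```

With `loss_prob=1.0` the best-effort channel delivers nothing at all, which, as noted above, still satisfies the best-effort definition.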
With CBR service, ATM packets (referred to as cells in ATM jargon) are carried across the network in such a way that the end-to-end delay experienced by a cell (the so-called cell-transfer delay, CTD), the variability in the end-to-end delay (often referred to as "jitter" or cell-delay variation, CDV), and the fraction of cells that are lost or delivered late (the so-called cell-loss rate, CLR) are guaranteed to be less than some specified values. Also, an allocated transmission rate (the peak cell rate, PCR) is defined for the connection, and the sender is expected to offer data to the network at this rate. The values for the PCR, CTD, CDV, and CLR are agreed upon by the sending host and the ATM network when the CBR connection is first established.

A second conceptually simple ATM service class is unspecified bit rate (UBR) network service. Unlike CBR service, which guarantees rate, delay, delay jitter, and loss, UBR makes no guarantees at all other than in-order delivery of cells (that is, of those cells that are fortunate enough to make it to the receiver). With the exception of in-order delivery, UBR service is thus equivalent to the Internet best-effort service model. As with the Internet best-effort service model, UBR also provides no feedback to the sender about whether or not a cell is dropped within the network. For reliable transmission of data over a UBR network, higher-layer protocols (such as those we studied in the previous chapter) are needed. UBR service might be well suited for noninteractive data transfer applications such as e-mail and newsgroups.

If UBR can be thought of as a "best-effort" service, then available bit rate (ABR) network service might best be characterized as a "better" best-effort service model. The two most important additional features of ABR service over UBR service are:

An excellent discussion of the rationale behind various aspects of the ATM Forum's Traffic Management Specification 4.0 [ATM Forum 1996] for CBR, VBR, ABR, and UBR service is [Garrett 1996].

4.1.2: Origins of Datagram and Virtual Circuit Service

The evolution of the Internet and ATM network service models reflects their origins. With the notion of a virtual circuit as a central organizing principle, and an early focus on CBR services, ATM reflects its roots in the telephony world (which uses "real circuits"). The subsequent definition of UBR and ABR service classes acknowledges the importance of data applications developed in the data networking community. Given the VC architecture and a focus on supporting real-time traffic with guarantees about the level of received performance (even with data-oriented services such as ABR), ATM's network layer is significantly more complex than that of the best-effort Internet. This, too, is in keeping with ATM's telephony heritage. Telephone networks, by necessity, had their "complexity" within the network, since they were connecting "dumb" end-system devices such as a rotary telephone. (For those too young to know, a rotary phone is a nondigital telephone with no buttons--only a dial.)

The Internet, on the other hand, grew out of the need to connect computers (that is, more sophisticated end devices) together. With sophisticated end-system devices, the Internet architects chose to make the network-service model (best effort) as simple as possible and to implement any additional functionality (for example, reliable data transfer), as well as any new application-level network services, at a higher layer, in the end systems. This inverts the model of the telephone network, with some interesting consequences:
