6.2: Streaming Stored Audio and Video

| In recent years, audio/video streaming has become a popular application and a major consumer of network bandwidth. This trend is likely to continue for several reasons. First, the cost of disk storage is decreasing rapidly, even faster than processing and bandwidth costs. Cheap storage will lead to a significant increase in the amount of stored audio/video in the Internet. For example, sharing MP3 audio files of rock music via Napster [Napster 2000] has become incredibly popular among college and high school students. Second, improvements in Internet infrastructure, such as high-speed residential access (that is, cable modems and ADSL, as discussed in Chapter 1), network caching of video (see Section 2.2), and new QoS-oriented Internet protocols (see Sections 6.5-6.9), will greatly facilitate the distribution of stored audio and video. And third, there is an enormous pent-up demand for high-quality video streaming, an application that combines two existing killer communication technologies: television and the on-demand Web.

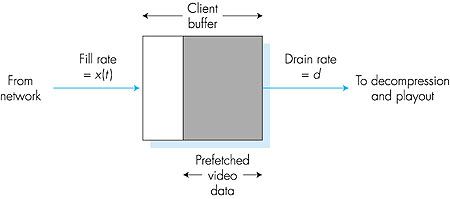

In audio/video streaming, clients request compressed audio/video files that reside on servers. As we'll discuss in this section, these servers can be "ordinary" Web servers, or can be special streaming servers tailored for the audio/video streaming application. Upon client request, the server directs an audio/video file to the client by sending the file into a socket. Both TCP and UDP socket connections are used in practice. Before the audio/video file is sent into the network, it is segmented, and the segments are typically encapsulated with special headers appropriate for audio/video traffic. The Real-time Transport Protocol (RTP), discussed in Section 6.4, is a public-domain standard for encapsulating such segments.

Once the client begins to receive the requested audio/video file, it begins to render the file, typically within a few seconds. Most existing products also provide for user interactivity, for example, pause/resume and temporal jumps within the audio/video file. This user interactivity also requires a protocol for client/server interaction. The Real-Time Streaming Protocol (RTSP), discussed at the end of this section, is a public-domain protocol for providing user interactivity.

Audio/video streaming is often requested by users through a Web client (that is, a browser). But because audio/video playout is not integrated directly in today's Web clients, a separate helper application is required for playing out the audio/video. The helper application is often called a media player, the most popular of which are currently RealNetworks' Real Player and the Microsoft Windows Media Player. The media player performs several functions, including:

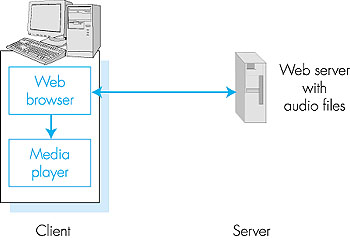
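The segmentation and encapsulation step described above (the server splits the file and prepends a header to each segment before sending it into a socket) can be illustrated with a minimal Python sketch. The header layout used here, a 16-bit sequence number followed by a 32-bit byte offset, is a hypothetical one chosen only for illustration; it is not the RTP header format, and the function names are assumptions as well.

```python
import struct

# Hypothetical 6-byte header: 16-bit sequence number + 32-bit byte offset,
# both in network byte order. This is NOT the RTP header layout.
HEADER = struct.Struct("!HI")


def segment(data, payload_size):
    """Yield packets, each a header followed by up to payload_size bytes."""
    if payload_size <= 0:
        raise ValueError("payload_size must be positive")
    for seq, offset in enumerate(range(0, len(data), payload_size)):
        payload = data[offset:offset + payload_size]
        # Sequence numbers wrap around at 2**16, as a 16-bit field must.
        yield HEADER.pack(seq & 0xFFFF, offset) + payload


def parse(packet):
    """Split a packet back into (sequence number, byte offset, payload)."""
    seq, offset = HEADER.unpack_from(packet)
    return seq, offset, packet[HEADER.size:]


if __name__ == "__main__":
    media = bytes(range(10))          # stand-in for a compressed media file
    for pkt in segment(media, 4):
        print(parse(pkt))
```

A receiver can use the sequence number to detect lost or reordered segments and the offset to place each payload correctly, which is the kind of information a media-specific header exists to carry.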

6.2.1: Accessing Audio and Video from a Web Server

Stored audio/video can reside either on a Web server that delivers the audio/video to the client over HTTP, or on an audio/video streaming server that delivers the audio/video over non-HTTP protocols (protocols that can be either proprietary or open standards). In this subsection, we examine delivery of audio/video from a Web server; in the next subsection, we examine delivery from a streaming server.

Consider first the case of audio streaming. When an audio file resides on a Web server, the audio file is an ordinary object in the server's file system, just as HTML and JPEG files are. When a user wants to hear the audio file, the user's host establishes a TCP connection with the Web server and sends an HTTP request for the object (see Section 2.2). Upon receiving a request, the Web server bundles the audio file in an HTTP response message and sends the response message back into the TCP connection.

The case of video can be a little trickier, because the audio and video parts of the "video" may be stored in two different files, that is, they may be two different objects in the Web server's file system. In this case, two separate HTTP requests are sent to the server (over two separate TCP connections for HTTP/1.0), and the audio and video files arrive at the client in parallel. It is up to the client to manage the synchronization of the two streams. It is also possible that the audio and video are interleaved in the same file, so that only one object need be sent to the client. To keep our discussion simple, for the case of "video" we assume that the audio and video are contained in one file.

A naive architecture for audio/video streaming is shown in Figure 6.1. In this architecture:

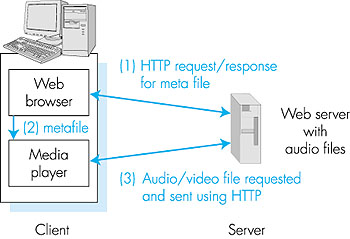

A direct TCP connection between the server and the media player is obtained as follows:

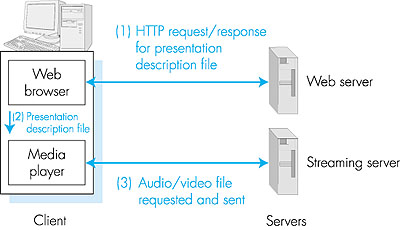
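As a rough illustration of the meta-file approach, the following Python sketch shows what a media player might do once the browser hands it the meta file: extract the media URL and build the HTTP request it would send over its own TCP connection to the Web server. The meta-file format assumed here (the first non-blank line is the URL) and the function names are hypothetical; real products define their own formats.

```python
from urllib.parse import urlsplit


def url_from_meta_file(body):
    """Return the media URL from a meta file.

    Assumption: the URL is the first non-blank line of the file.
    """
    for line in body.splitlines():
        line = line.strip()
        if line:
            return line
    raise ValueError("meta file contains no URL")


def build_get_request(url):
    """Return (host, port, request bytes) for fetching url over HTTP/1.0."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        raise ValueError("expected an http:// URL")
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    request = f"GET {path} HTTP/1.0\r\nHost: {parts.netloc}\r\n\r\n"
    # 80 is the default HTTP port when the URL does not name one.
    return parts.hostname, parts.port or 80, request.encode("ascii")


if __name__ == "__main__":
    meta = "\nhttp://www.example.com/media/song.au\n"   # hypothetical meta file
    host, port, request = build_get_request(url_from_meta_file(meta))
    print(host, port)
    print(request.decode("ascii"))
```

The media player would then open a TCP connection to the returned host and port, write the request bytes, and begin playing out the response body as it arrives rather than waiting for the whole file.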

We have just learned how a meta file can allow a media player to communicate directly with a Web server housing an audio/video file. Yet many companies that sell products for audio/video streaming do not recommend the architecture we just described. This is because the architecture has the media player communicate with the server over HTTP and hence also over TCP. HTTP is often considered insufficiently rich to allow for satisfactory user interaction with the server; in particular, HTTP does not easily allow a user (through the media player) to send pause/resume, fast-forward, and temporal jump commands to the server.

6.2.2: Sending Multimedia from a Streaming Server to a Helper Application

In order to get around HTTP and/or TCP, audio/video can be stored on and sent from a streaming server to the media player. This streaming server could be a proprietary streaming server, such as those marketed by RealNetworks and Microsoft, or could be a public-domain streaming server. With a streaming server, audio/video can be sent over UDP (rather than TCP) using application-layer protocols that may be better tailored than HTTP to audio/video streaming.

This architecture requires two servers, as shown in Figure 6.3. One server, the HTTP server, serves Web pages (including meta files). The second server, the streaming server, serves the audio/video files. The two servers can run on the same end system or on two distinct end systems. The steps for this architecture are similar to those described in the previous architecture. However, now the media player requests the file from a streaming server rather than from a Web server, and now the media player and streaming server can interact using their own protocols. These protocols can allow for rich user interaction with the audio/video stream.

In the architecture of Figure 6.3, there are many options for delivering the audio/video from the streaming server to the media player. A partial list of the options is given below:

6.2.3: Real-Time Streaming Protocol (RTSP)

Many Internet multimedia users (particularly those who grew up with a TV remote control in hand) will want to control the playback of continuous media by pausing playback, repositioning playback to a future or past point in time, visually fast-forwarding playback, visually rewinding playback, and so on. This functionality is similar to what a user has with a VCR when watching a video cassette or with a CD player when listening to a music CD. To allow a user to control playback, the media player and server need a protocol for exchanging playback control information. RTSP, defined in RFC 2326, is such a protocol.

But before getting into the details of RTSP, let us first indicate what RTSP does not do:

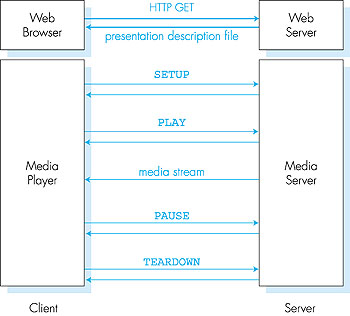

Recall from Section 2.3 that the File Transfer Protocol (FTP) also uses the out-of-band notion. In particular, FTP uses two client/server pairs of sockets, each pair with its own port number: one client/server socket pair supports a TCP connection that transports control information; the other client/server socket pair supports a TCP connection that actually transports the file. The RTSP channel is in many ways similar to FTP's control channel.

Let us now walk through a simple RTSP example, which is illustrated in Figure 6.5. The Web browser first requests a presentation description file from a Web server. The presentation description file can have references to several continuous-media files as well as directives for synchronization of the continuous-media files. Each reference to a continuous-media file begins with the URL scheme rtsp://.
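To make the role of these rtsp:// references concrete, here is a small Python sketch of how a media player might pull them out of a presentation description and work out which host and port to contact for the RTSP control channel. The function names and the assumption that each reference appears in a double-quoted `src` attribute are illustrative only; port 554 is the default RTSP port given in RFC 2326.

```python
import re
from urllib.parse import urlsplit

RTSP_DEFAULT_PORT = 554  # default RTSP port (RFC 2326)


def find_rtsp_urls(description):
    """Return every rtsp:// URL found in a src="..." attribute, in order.

    Assumption: references are written as double-quoted src attributes.
    """
    return re.findall(r'src="(rtsp://[^"]+)"', description)


def control_endpoint(url):
    """Return the (host, port) to contact for the RTSP control channel."""
    parts = urlsplit(url)
    if parts.scheme != "rtsp":
        raise ValueError("expected an rtsp:// URL")
    return parts.hostname, parts.port or RTSP_DEFAULT_PORT


if __name__ == "__main__":
    # Hypothetical two-track description used only for this sketch.
    description = (
        '<track type=audio src="rtsp://audio.example.com/twister/audio.en/lofi">\n'
        '<track type="video/jpeg" src="rtsp://video.example.com:8554/twister/video">\n'
    )
    for url in find_rtsp_urls(description):
        print(url, control_endpoint(url))
```

Because each track carries its own URL, the audio and video of one presentation can live on different servers, and the player simply opens one control channel per endpoint.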

Below we provide a sample presentation file that has been adapted from [Schulzrinne 1997]. In this presentation, an audio and video stream are played in parallel and in lip sync (as part of the same "group"). For the audio stream, the media player can choose ("switch") between two audio recordings, a low-fidelity recording and a high-fidelity recording.

    <title>Twister</title>
    <session>
      <group language=en lipsync>
        <switch>
          <track type=audio e="PCMU/8000/1" src="rtsp://audio.example.com/twister/audio.en/lofi">
          <track type=audio e="DVI4/16000/2" pt="90 DVI4/8000/1" src="rtsp://audio.example.com/twister/audio.en/hifi">
        </switch>
        <track type="video/jpeg" src="rtsp://video.example.com/twister/video">
      </group>
    </session>

The Web server encapsulates the presentation description file in an HTTP response message and sends the message to the browser. When the browser receives the HTTP response message, the browser invokes a media player (that is, the helper application) based on the content-type field of the message. The presentation description file includes references to media streams, using the URL scheme rtsp://, as shown in the above sample.

As shown in Figure 6.5, the player and the server then send each other a series of RTSP messages. The player sends an RTSP SETUP request, and the server sends an RTSP SETUP response. The player sends an RTSP PLAY request, say, for low-fidelity audio, and the server sends an RTSP PLAY response. At this point, the streaming server pumps the low-fidelity audio into its own in-band channel. Later, the media player sends an RTSP PAUSE request, and the server responds with an RTSP PAUSE response. When the user is finished, the media player sends an RTSP TEARDOWN request, and the server responds with an RTSP TEARDOWN response.

Each RTSP session has a session identifier, which is chosen by the server. The client initiates the session with the SETUP request, and the server responds to the request with an identifier. The client repeats the session identifier for each request, until the client closes the session with the TEARDOWN request. The following is a simplified example of an RTSP session between a client (C:) and a server (S:).

    C: SETUP rtsp://audio.example.com/twister/audio RTSP/1.0
       Transport: rtp/udp; compression; port=3056; mode=PLAY
    S: RTSP/1.0 200 1 OK
       Session: 4231
    C: PLAY rtsp://audio.example.com/twister/audio.en/lofi RTSP/1.0
       Session: 4231
       Range: npt=0-
    C: PAUSE rtsp://audio.example.com/twister/audio.en/lofi RTSP/1.0
       Session: 4231
       Range: npt=37
    C: TEARDOWN rtsp://audio.example.com/twister/audio.en/lofi RTSP/1.0
       Session: 4231
    S: 200 3 OK

Notice that in this example, the player chose not to play back the complete presentation, but instead only the low-fidelity portion of the presentation. The RTSP protocol is actually capable of doing much more than described in this brief introduction. In particular, RTSP has facilities that allow clients to stream toward the server (for example, for recording). RTSP has been adopted by RealNetworks, currently the industry leader in audio/video streaming. Henning Schulzrinne makes available a Web page on RTSP [Schulzrinne 1999]. |
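The session-identifier handling described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a working RTSP client: the class and function names are hypothetical, no network I/O is performed, and the messages follow the simplified form used in this section (real RFC 2326 messages carry additional headers, such as CSeq, that are omitted here).

```python
def build_request(method, url, headers=None):
    """Format a simplified RTSP/1.0 request as text."""
    lines = [f"{method} {url} RTSP/1.0"]
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    return "\r\n".join(lines) + "\r\n\r\n"


class PlayerSession:
    """Remembers the server-chosen session identifier between requests."""

    def __init__(self, url):
        self.url = url
        self.session_id = None   # unknown until the SETUP response arrives

    def setup(self, client_port):
        """Build the SETUP request that initiates the session."""
        transport = f"rtp/udp; compression; port={client_port}; mode=PLAY"
        return build_request("SETUP", self.url, {"Transport": transport})

    def handle_setup_response(self, response):
        """Extract the Session header from the server's SETUP response."""
        for line in response.splitlines()[1:]:      # skip the status line
            name, _, value = line.partition(":")
            if name.strip().lower() == "session":
                self.session_id = value.strip()
                return
        raise ValueError("SETUP response carried no Session header")

    def request(self, method, **extra_headers):
        """Build PLAY, PAUSE, or TEARDOWN, repeating the session identifier."""
        if self.session_id is None:
            raise RuntimeError("SETUP must complete before " + method)
        headers = {"Session": self.session_id}
        headers.update(extra_headers)
        return build_request(method, self.url, headers)


if __name__ == "__main__":
    player = PlayerSession("rtsp://audio.example.com/twister/audio.en/lofi")
    print(player.setup(3056))
    player.handle_setup_response("RTSP/1.0 200 OK\r\nSession: 4231\r\n\r\n")
    print(player.request("PLAY", Range="npt=0-"))
    print(player.request("PAUSE", Range="npt=37"))
    print(player.request("TEARDOWN"))
```

Keeping the identifier in one object mirrors the protocol's design: the control channel itself is stateless text, so it is the repeated Session header that ties each PLAY, PAUSE, and TEARDOWN to the stream created by SETUP.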