In the previous section we discussed how RSVP can be used to reserve per-flow resources at routers within the network. The ability to request and reserve per-flow resources, in turn, makes it possible for the Intserv framework to provide quality-of-service guarantees to individual flows. As work on Intserv and RSVP proceeded, however, researchers involved with these efforts (for example, [Zhang 1998]) began to uncover some of the difficulties associated with the Intserv model and per-flow reservation of resources:

- Scalability. Per-flow resource reservation using RSVP implies the need for a router to process resource reservations and to maintain per-flow state for each flow passing through the router. With recent measurements [Thomson 1997] suggesting that even for an OC-3 speed link, approximately 256,000 source-destination pairs might be seen in one minute in a backbone router, per-flow reservation processing represents a considerable overhead in large networks.

- Flexible service models. The Intserv framework provides for a small number of prespecified service classes. This particular set of service classes does not allow for more qualitative or relative definitions of service distinctions (for example, "Service class A will receive preferred treatment over service class B."). These more qualitative definitions might better fit our intuitive notion of service distinction (for example, first class versus coach class in air travel; "platinum" versus "gold" versus "standard" credit cards).

These considerations have led to the recent so-called "diffserv" (Differentiated Services) activity [Diffserv 1999] within the Internet Engineering Task Force. The Diffserv working group is developing an architecture for providing scalable and flexible service differentiation--that is, the ability to handle different "classes" of traffic in different ways within the Internet. The need for scalability arises from the fact that hundreds of thousands of simultaneous source-destination traffic flows may be present at a backbone router of the Internet. We will see shortly that this need is met by placing only simple functionality within the network core, with more complex control operations being implemented at the "edge" of the network. The need for flexibility arises from the fact that new service classes may arise and old service classes may become obsolete. The differentiated services architecture is flexible in the sense that it does not define specific services or service classes (as Intserv does). Instead, the differentiated services architecture provides the functional components, that is, the pieces of a network architecture, with which such services can be built. Let us now examine these components in detail.

6.9.1: Differentiated Services: A Simple Scenario

To set the framework for defining the architectural components of the differentiated service model, let us begin with the simple network shown in Figure 6.40. In the following, we describe one possible use of the Diffserv components. Many other variations are possible, as described in RFC 2475. Our goal here is to provide an introduction to the key aspects of differentiated services, rather than to describe the architectural model in exhaustive detail.

Figure 6.40: A simple Diffserv network example

The differentiated services architecture consists of two sets of functional elements:

- Edge functions: Packet classification and traffic conditioning. At the incoming "edge" of the network (that is, at either a Diffserv-capable host that generates traffic or at the first Diffserv-capable router that the traffic passes through), arriving packets are marked. More specifically, the Differentiated Services (DS) field of the packet header is set to some value. For example, in Figure 6.40, packets being sent from H1 to H3 might be marked at R1, while packets being sent from H2 to H4 might be marked at R2. The mark that a packet receives identifies the class of traffic to which it belongs. Different classes of traffic will then receive different service within the core network. The RFC defining the differentiated services architecture, RFC 2475, uses the term behavior aggregate rather than "class of traffic." After being marked, a packet may be immediately forwarded into the network, delayed for some time before being forwarded, or discarded. We will see shortly that many factors can influence how a packet is to be marked, and whether it is to be forwarded immediately, delayed, or dropped.

- Core function: Forwarding. When a DS-marked packet arrives at a Diffserv-capable router, the packet is forwarded onto its next hop according to the so-called per-hop behavior associated with that packet's class. The per-hop behavior influences how a router's buffers and link bandwidth are shared among the competing classes of traffic. A crucial tenet of the Diffserv architecture is that a router's per-hop behavior will be based only on packet markings, that is, the class of traffic to which a packet belongs. Thus, if packets being sent from H1 to H3 in Figure 6.40 receive the same marking as packets from H2 to H4, then the network routers treat these packets as an aggregate, without distinguishing whether the packets originated at H1 or H2. For example, R3 would not distinguish between packets from H1 and H2 when forwarding these packets on to R4. Thus, the differentiated service architecture obviates the need to keep router state for individual source-destination pairs--an important consideration in meeting the scalability requirement discussed at the beginning of this section.
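The core function can be sketched in a few lines of Python. This is a minimal illustration, not router code. The `Packet` type, the contents of the hypothetical `PHB_TABLE`, and the choice of code point 46 for the premium class are assumptions made for the example. Code point 46 is the value RFC 2598 recommends for EF. The lookup is keyed on the mark alone, so the table grows with the number of classes rather than with the number of source-destination pairs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    dscp: int  # the mark set at the network edge


# Hypothetical PHB table held by a core router: one entry per behavior
# aggregate (class of traffic), not one entry per source-destination pair.
PHB_TABLE = {
    46: "expedited",   # assumed mark for a premium class
    0: "best-effort",  # default mark
}


def core_phb(packet: Packet) -> str:
    """Select a per-hop behavior using only the packet's mark.

    Source and destination are deliberately ignored, so the core keeps
    no per-flow state. Unknown marks fall back to best-effort (an
    assumption made for this sketch).
    """
    return PHB_TABLE.get(packet.dscp, "best-effort")


h1_to_h3 = Packet("H1", "H3", dscp=46)
h2_to_h4 = Packet("H2", "H4", dscp=46)
print(core_phb(h1_to_h3), core_phb(h2_to_h4))  # expedited expedited
```

Because both packets carry the same mark, they fall into the same aggregate, just as R3 in Figure 6.40 would not distinguish H1's packets from H2's.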

An analogy might prove useful here. At many large-scale social events (for example, a large public reception, a large dance club or discothèque, a concert, a football game), people entering the event receive a "pass" of one type or another. There are VIP passes for Very Important People; there are over-18 passes for people who are 18 years old or older (for example, if alcoholic drinks are to be served); there are backstage passes at concerts; there are press passes for reporters; there is an ordinary pass for the Ordinary Person. These passes are typically distributed on entry to the event, that is, at the "edge" of the event. It is here at the edge where computationally intensive operations, such as paying for entry, checking for the appropriate type of invitation, and matching an invitation against a piece of identification, are performed. Furthermore, there may be a limit on the number of people of a given type that are allowed into an event. If there is such a limit, people may have to wait before entering the event. Once inside the event, one's pass allows one to receive differentiated service at many locations around the event--a VIP is provided with free drinks, a better table, free food, entry to exclusive rooms, and fawning service. Conversely, an Ordinary Person is excluded from certain areas, pays for drinks, and receives only basic service. In both cases, the service received within the event depends solely on the type of one's pass. Moreover, all people within a class are treated alike.

6.9.2: Traffic Classification and Conditioning

In the differentiated services architecture, a packet's mark is carried within the DS field in the IPv4 or IPv6 packet header. The definition of the DS field is intended to supersede the earlier definitions of the IPv4 Type-of-Service field (see Section 4.4) and the IPv6 Traffic Class field (see Section 4.7). The structure of this eight-bit field is shown below in Figure 6.41.

Figure 6.41: Structure of the DS field in IPv4 and IPv6 headers

The six-bit differentiated service code point (DSCP) subfield determines the so-called per-hop behavior (see Section 6.9.3) that the packet will receive within the network. The two-bit CU subfield of the DS field is currently unused. Restrictions are placed on the use of half of the DSCP values in order to preserve backward compatibility with the IPv4 ToS field use; see RFC 2474 for details. For our purposes here, we need only note that a packet's mark, its "code point" in the Diffserv terminology, is carried in the eight-bit Diffserv field.
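The bit layout of Figure 6.41 can be made concrete with a short sketch. Following the layout in RFC 2474, it assumes that the DSCP occupies the six high-order bits of the DS byte and that CU occupies the two low-order bits. Under that layout, extracting or setting a code point is just a shift and a mask. The function names are hypothetical.

```python
def split_ds_field(ds_byte: int) -> tuple[int, int]:
    """Split an eight-bit DS field into (DSCP, CU).

    The DSCP is the six high-order bits; CU is the two low-order bits.
    """
    if not 0 <= ds_byte <= 0xFF:
        raise ValueError("the DS field is eight bits")
    return ds_byte >> 2, ds_byte & 0b11


def make_ds_field(dscp: int, cu: int = 0) -> int:
    """Build an eight-bit DS field from a six-bit DSCP and a two-bit CU."""
    if not 0 <= dscp <= 0b111111:
        raise ValueError("the DSCP is six bits")
    if not 0 <= cu <= 0b11:
        raise ValueError("the CU subfield is two bits")
    return (dscp << 2) | cu


print(hex(make_ds_field(46)))  # 0xb8
print(split_ds_field(0xB8))    # (46, 0)
```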

As noted above, a packet is marked by setting its Diffserv field value at the edge of the network. This can happen either at a Diffserv-capable host or at the first point at which the packet encounters a Diffserv-capable router. For our discussion here, we will assume marking occurs at an edge router that is directly connected to a sender, as shown in Figure 6.40.

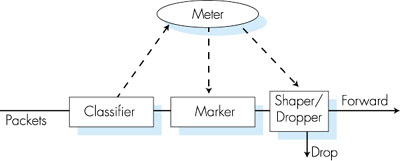

Figure 6.42 provides a logical view of the classification and marking function within the edge router. Packets arriving at the edge router are first "classified." The classifier selects packets based on the values of one or more packet header fields (for example, source address, destination address, source port, destination port, protocol ID) and steers the packet to the appropriate marking function. The DS field value is then set accordingly at the marker. Once packets are marked, they are forwarded along their route to the destination. At each subsequent Diffserv-capable router, marked packets then receive the service associated with their marks. Even this simple marking scheme can be used to support different classes of service within the Internet. For example, all packets coming from a certain set of source IP addresses (for example, those IP addresses that have paid for an expensive priority service within their ISP) could be marked on entry to the ISP, and then receive a specific forwarding service (for example, higher-priority forwarding) at all subsequent Diffserv-capable routers. A question not addressed by the Diffserv working group is how the classifier obtains the "rules" for such classification. This could be done manually, that is, the network administrator could load into the edge routers a table of source addresses that are to be marked in a given way, or it could be done under the control of some yet-to-be-specified signaling protocol.

Figure 6.42: Simple packet classification and marking
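A classifier and marker of the kind shown in Figure 6.42 might look like the following sketch. Everything here is hypothetical: the `Rule` fields, the first-match-wins policy, the code point values, and the rule table itself. The table stands in for one an administrator might load manually, since the Diffserv working group does not say how the classifier obtains its rules. The addresses come from the IPv4 ranges reserved for documentation.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Header:
    src: str
    dst: str
    src_port: int
    dst_port: int
    proto: int  # IP protocol ID: 6 = TCP, 17 = UDP


@dataclass(frozen=True)
class Rule:
    """One classification rule; a field left as None matches any value."""
    dscp: int                      # mark to apply when the rule matches
    src_net: Optional[str] = None  # source prefix, e.g. "192.0.2.0/24"
    dst_port: Optional[int] = None
    proto: Optional[int] = None

    def matches(self, h: Header) -> bool:
        if self.src_net is not None:
            if ipaddress.ip_address(h.src) not in ipaddress.ip_network(self.src_net):
                return False
        if self.dst_port is not None and h.dst_port != self.dst_port:
            return False
        if self.proto is not None and h.proto != self.proto:
            return False
        return True


# Hypothetical rule table, checked in order (first match wins).
RULES = [
    Rule(dscp=46, src_net="192.0.2.0/24"),  # sources that paid for priority
    Rule(dscp=10, proto=6, dst_port=80),    # TCP traffic to port 80
]
DEFAULT_DSCP = 0  # unmatched packets get the default mark


def classify_and_mark(h: Header) -> int:
    """Return the DSCP the marker would write into this packet's DS field."""
    for rule in RULES:
        if rule.matches(h):
            return rule.dscp
    return DEFAULT_DSCP


print(classify_and_mark(Header("192.0.2.7", "198.51.100.1", 5000, 443, 6)))  # 46
```

Note that the marking depends only on header fields, not on how fast packets arrive. The next paragraph takes up rate limiting.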

In Figure 6.42,

all packets meeting a given header condition receive the same marking,

regardless of the packet arrival rate. In some scenarios, it might also

be desirable to limit the rate at which packets bearing a given marking

are injected into the network. For example, an end user might negotiate

a contract with its ISP to receive high-priority service, but at the same

time agree to limit the maximum rate at which it would send packets into

the network. That is, the end user agrees that its packet sending rate

would be within some declared traffic profile. The traffic profile

might contain a limit on the peak rate, as well as the burstiness of the

packet flow, as we saw in Section 6.6 with the leaky bucket mechanism.

As long as the user sends packets into the network in a way that conforms

to the negotiated traffic profile, the packets receive their priority marking.

On the other hand, if the traffic profile is violated, the out-of-profile

packets might be marked differently, might be shaped (for example, delayed

so that a maximum rate constraint would be observed), or might be dropped

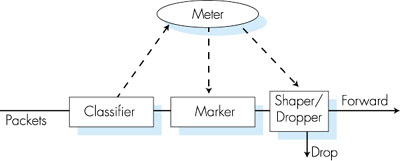

at the network edge. The role of the metering function, shown in

Figure 6.43, is to compare the incoming packet flow with the negotiated

traffic profile and to determine whether a packet is within the negotiated

traffic profile. The actual decision about whether to immediately re-mark,

forward, delay, or drop a packet is not specified in the Diffserv

architecture. The Diffserv architecture only provides the framework for

performing packet marking and shaping/dropping; it does not mandate

any specific policy for what marking and conditioning (shaping or dropping)

is actually to be done. The hope, of course, is that the Diffserv architectural

components are together flexible enough to accommodate a wide and constant

evolving set of services to end users. For a discussion of a policy framework

for Diffserv, see [Rajan

1999].

Figure 6.43: Logical view of packet classification and traffic conditioning at the edge router
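The metering step can be illustrated with the token form of the leaky bucket mechanism from Section 6.6. The sketch below assumes a profile of `rate` tokens per second and a bucket of depth `burst`, with one token admitting one packet. The `condition` function is a hypothetical policy. It keeps the mark for in-profile packets, and for out-of-profile packets it either re-marks them or drops them (signaled by returning `None`). Shaping, that is, delaying a packet until a token is available, is not shown. As noted above, Diffserv mandates none of these choices.

```python
from typing import Optional


class TokenBucketMeter:
    """Meter a flow against a (rate, burst) traffic profile.

    rate:  tokens added per second; one token admits one packet here
    burst: bucket depth, i.e. the largest back-to-back burst still in profile
    """

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start with a full bucket
        self.last = 0.0      # time of the previous arrival (seconds)

    def in_profile(self, now: float) -> bool:
        """Return True if a packet arriving at time `now` conforms.

        Arrival times are assumed to be nondecreasing.
        """
        # Add the tokens generated since the last arrival, capped at the depth.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def condition(meter: TokenBucketMeter, now: float, dscp_in: int,
              dscp_out: Optional[int] = None) -> Optional[int]:
    """Hypothetical conditioning policy.

    In-profile packets keep dscp_in. Out-of-profile packets are re-marked
    to dscp_out, or dropped (None is returned) when dscp_out is None.
    """
    if meter.in_profile(now):
        return dscp_in
    return dscp_out


meter = TokenBucketMeter(rate=1.0, burst=2.0)
print([condition(meter, t, dscp_in=46, dscp_out=0) for t in (0.0, 0.0, 0.0, 1.0)])
# [46, 46, 0, 46]
```

In the usage example, a burst of three packets at time 0 exceeds the two-packet bucket, so the third is re-marked. By time 1.0 a new token has accumulated, so the fourth packet is in profile again.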

6.9.3: Per-Hop Behaviors

So far, we have focused on the edge functions in the differentiated services architecture. The second key component of the Diffserv architecture involves the per-hop behavior performed by Diffserv-capable routers. The per-hop behavior (PHB) is rather cryptically, but carefully, defined as "a description of the externally observable forwarding behavior of a Diffserv node applied to a particular Diffserv behavior aggregate" [RFC 2475]. Digging a little deeper into this definition, we can see several important considerations embedded within:

- A PHB can result in different classes of traffic receiving different performance (that is, different externally observable forwarding behavior).

- While a PHB defines differences in performance (behavior) among classes, it does not mandate any particular mechanism for achieving these behaviors. As long as the externally observable performance criteria are met, any implementation mechanism and any buffer/bandwidth allocation policy can be used. For example, a PHB would not require that a particular packet queuing discipline (a priority queue versus a weighted-fair-queuing queue versus a first-come-first-served queue) be used to achieve a particular behavior. The PHB is the "end," to which resource allocation and implementation mechanisms are the "means."

- Differences in performance must be observable, and hence measurable.

An example of a simple PHB is one that guarantees that a given class of marked packets receives at least x% of the outgoing link bandwidth over some interval of time. Another per-hop behavior might specify that one class of traffic will always receive strict priority over another class of traffic--that is, if a high-priority packet and a low-priority packet are present in a router's queue at the same time, the high-priority packet will always leave first. Note that while a priority queuing discipline might be a natural choice for implementing this second PHB, any queuing discipline that implements the required observable behavior is acceptable.
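The strict-priority behavior described above can be realized, among other ways, with a pair of FIFO queues served in priority order. The following sketch shows the externally observable result: every queued high-priority packet leaves before any low-priority packet. The class and packet names are hypothetical. A different mechanism producing the same departure order would satisfy the PHB equally well.

```python
from collections import deque
from typing import Optional


class StrictPriorityQueue:
    """Two-class strict priority: a queued high-priority packet always
    departs before any queued low-priority packet."""

    def __init__(self) -> None:
        self.high: deque = deque()
        self.low: deque = deque()

    def enqueue(self, packet: str, high_priority: bool) -> None:
        (self.high if high_priority else self.low).append(packet)

    def dequeue(self) -> Optional[str]:
        """Return the next packet to transmit, or None if both queues are empty."""
        if self.high:
            return self.high.popleft()
        if self.low:
            return self.low.popleft()
        return None


q = StrictPriorityQueue()
q.enqueue("low-1", high_priority=False)
q.enqueue("high-1", high_priority=True)
q.enqueue("low-2", high_priority=False)
q.enqueue("high-2", high_priority=True)
order = [q.dequeue() for _ in range(4)]
print(order)  # ['high-1', 'high-2', 'low-1', 'low-2']
```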

Currently, two PHBs are under active discussion within the Diffserv working group: an expedited forwarding (EF) PHB [RFC 2598] and an assured forwarding (AF) PHB [RFC 2597]:

- The expedited forwarding PHB specifies that the departure rate of a class of traffic from a router must equal or exceed a configured rate. That is, during any interval of time, the class of traffic can be guaranteed to receive enough bandwidth so that the output rate of the traffic equals or exceeds this minimum configured rate. Note that the EF per-hop behavior implies some form of isolation among traffic classes, as this guarantee is made independently of the traffic intensity of any other classes that are arriving to a router. Thus, even if the other classes of traffic are overwhelming router and link resources, enough of those resources must still be made available to the class to ensure that it receives its minimum rate guarantee. EF thus provides a class with the simple abstraction of a link with a minimum guaranteed link bandwidth.

- The assured forwarding PHB is more complex. AF divides traffic into four classes, where each AF class is guaranteed to be provided with some minimum amount of bandwidth and buffering. Within each class, packets are further partitioned into one of three "drop preference" categories. When congestion occurs within an AF class, a router can then discard (drop) packets based on their drop preference values. See RFC 2597 for details. By varying the amount of resources allocated to each class, an ISP can provide different levels of performance to the different AF traffic classes.
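Two details of AF can be sketched concretely. First, RFC 2597 recommends a code point for class AFxy (class x, drop precedence y) that works out to 8x + 2y, so AF11 through AF13 are 10, 12, and 14. Second, how a router chooses which packets to discard is left open. The threshold scheme below is an assumed, deliberately simple stand-in, and its threshold values are made up. In it, packets with a higher drop precedence are refused at a shorter queue length.

```python
def af_dscp(af_class: int, drop_prec: int) -> int:
    """Recommended code point for AF class x, drop precedence y: 8*x + 2*y."""
    if af_class not in (1, 2, 3, 4) or drop_prec not in (1, 2, 3):
        raise ValueError("AF class is 1-4 and drop precedence is 1-3")
    return 8 * af_class + 2 * drop_prec


# Hypothetical per-class thresholds (in packets). As a class's queue grows,
# precedence-3 packets are refused first, then precedence-2, then precedence-1.
DROP_THRESHOLDS = {1: 60, 2: 40, 3: 20}


def admit(queue_len: int, drop_prec: int) -> bool:
    """Accept a packet into its AF class queue unless the queue has already
    reached the threshold for that packet's drop precedence."""
    return queue_len < DROP_THRESHOLDS[drop_prec]


print([af_dscp(1, y) for y in (1, 2, 3)])  # [10, 12, 14]
print([admit(30, y) for y in (1, 2, 3)])   # [True, True, False]
```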

The AF PHB could be used as a building block to provide different levels of service to the end systems, for example, Olympic-like gold, silver, and bronze classes of service. But what would be required to do so? If gold service is indeed going to be "better" (and presumably more expensive!) than silver service, then the ISP must ensure that gold packets receive lower delay and/or loss than silver packets. Recall, however, that a minimum amount of bandwidth and buffering is to be allocated to each class. What would happen if gold service were allocated x% of a link's bandwidth and silver service were allocated x/2% of the link's bandwidth, but the traffic intensity of gold packets was 100 times higher than that of silver packets? In this case, it is likely that silver packets would receive better performance than the gold packets! (This is an outcome that leaves the silver service buyers happy, but the high-spending gold service buyers extremely unhappy!) Clearly, when creating a service out of a PHB, more than just the PHB itself will come into play. In this example, the dimensioning of resources--determining how much of each resource will be allocated to each class of service--must be done hand-in-hand with knowledge about the traffic demands of the various classes of traffic.
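The gold/silver arithmetic can be checked directly. This sketch treats bandwidth allocated per unit of offered load as a rough proxy for service quality. That is an assumption, since actual delay and loss depend on much more. Under it, silver ends up with 50 times as much bandwidth per unit of traffic as gold, whatever value x takes. Exact fractions are used so that the printed ratio is not affected by floating-point rounding. The function names are hypothetical.

```python
from fractions import Fraction


def bandwidth_per_unit_load(allocated: Fraction, load: Fraction) -> Fraction:
    """Link share a class receives per unit of its own offered load."""
    return allocated / load


def silver_to_gold_ratio(x: Fraction, gold_to_silver_load: int = 100) -> Fraction:
    """Gold gets a fraction x of the link and silver gets x/2; gold offers
    `gold_to_silver_load` times as much traffic as silver (silver load = 1)."""
    silver = bandwidth_per_unit_load(x / 2, Fraction(1))
    gold = bandwidth_per_unit_load(x, Fraction(gold_to_silver_load))
    return silver / gold


for pct in (10, 20, 40):
    print(pct, silver_to_gold_ratio(Fraction(pct, 100)))  # ratio is 50 each time
```

Algebraically, the ratio is (x/2) / (x/100) = 50. That is why no choice of x alone can make gold "better" here. Only dimensioning against the actual demand can.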

6.9.4: A Beginning

The differentiated services architecture is still in the early stages of its development and is rapidly evolving. RFCs 2474 and 2475 define the fundamental framework of the Diffserv architecture but themselves are likely to evolve as well. The ways in which PHBs, edge functionality, and traffic profiles can be combined to provide an end-to-end service, such as a virtual leased line service [RFC 2638] or an Olympic-like gold/silver/bronze service [RFC 2597], are still under investigation. In our discussion above, we have assumed that the Diffserv architecture is deployed within a single administrative domain. The (typical) case where an end-to-end service must be fashioned from a connection that crosses several administrative domains, and through non-Diffserv-capable routers, poses additional challenges beyond those described above.